Go beyond the screen with technical magic

/ An AR camera looks simple, but it is a way to do successful branding and promote media content. /

Many people now know what augmented reality is and understand what they can do with it. Pokemon Go and the smartphone were the flint and steel. It has already been over three years since the summer of 2016.

Globally, millions of iOS and Android phone users enjoyed a full-stack, Internet-based mobile AR game without even knowing the term augmented reality. A GPS sensor triggered cute characters to show up here and there on the screen of the user's phone. Users could capture the cute monsters by throwing a digital magic ball. The user interactions are pretty simple, but to make such interactions and the user experience happen, a backend system handles the random appearance of characters and the location-based triggering. Additionally, each user's score and transaction data are gathered in a cloud database. Though the real-time rendering, shading and ray-tracing quality of Pokemon Go is still quite low, the whole system integration was enough for it to be called the world's first well-working, playful AR game. Mobility is also the most crucial characteristic of this game.
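To make the location-based triggering concrete, here is a minimal sketch in Python. It is not how Pokemon Go is actually implemented; the function names, the spawn list layout and the 50-meter radius are all my own assumptions for illustration. It simply checks which pre-placed characters are within a given distance of the user's GPS position, using the haversine great-circle distance.

```python
import math

EARTH_RADIUS_M = 6_371_000  # mean Earth radius in meters


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in meters between two GPS coordinates (degrees)."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    d_phi = phi2 - phi1
    d_lambda = math.radians(lon2 - lon1)
    a = (math.sin(d_phi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(d_lambda / 2) ** 2)
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def visible_spawns(user_lat, user_lon, spawns, radius_m=50.0):
    """Return the names of spawn points within radius_m of the user.

    `spawns` is a hypothetical list of dicts with "name", "lat" and "lon" keys.
    """
    return [
        s["name"]
        for s in spawns
        if haversine_m(user_lat, user_lon, s["lat"], s["lon"]) <= radius_m
    ]


if __name__ == "__main__":
    # Made-up spawn points around a made-up user position.
    spawns = [
        {"name": "near", "lat": 37.5668, "lon": 126.9780},
        {"name": "far", "lat": 37.5765, "lon": 126.9780},
    ]
    print(visible_spawns(37.5665, 126.9780, spawns))
```

In a real service the spawn list would come from the backend and the check would likely run on the server as well, but the principle of triggering content by distance is the same.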

Anatomy of an AR experience

I explained it this way because the scheme and principles of most AR experiences are not so different from the components of Pokemon Go. Lenses and a screen are a key part of the magical transformation between the physical and digital worlds, while sensors and calibration to the world are the important technological components that put a digital simulation where users are looking in real time. Real-time rendering performance has become more and more crucial for making pre-designed 3D objects look much more realistic. Furthermore, user interactions enable users to act on their own intentions, and a scalable and stable backend system is the engine that keeps an AR experience alive, like the hidden part of an iceberg. Needless to say, Internet connections are indispensable for the whole user experience to be workable and playable with others. These are the major components of most AR experiences.

With this understanding of a mobile AR experience, I am now going to share my most recent project and some lessons from it. It is about streaming an eSports tournament and promoting both the event and my employer's brand name.

An eSports event and the promotional AR experiences

2019 LoL KeSPA Cup is the official name of the eSports tournament I have been involved in since August 2019. The event began on December 23, 2019 and ends today (January 5, 2020), as the final match is being streamed on the official YouTube channel of e-sports KBS (https://www.youtube.com/watch?v=Iuxjj1a_fUc). Twenty professional and amateur League of Legends teams participated in this tournament, and SANDBOX Gaming and Afreeca Freecs are currently competing to win the KeSPA Cup. My company, Korean Broadcasting System, is an official broadcaster of this tournament, and "e-sports KBS" is not only the official name of the YouTube channel but also the brand name of our eSports streaming service. Additionally, it is used in the domain name of the official website: esports.kbs.co.kr.

Having introduced the eSports event I have been involved in, I should explain why I think of an AR experience as a way to promote a brand or media service. In a sentence: an augmented reality experience is an effective way for users or audiences to enjoy, in person and on their own mobile device, a scene similar to the one happening on a flat two-dimensional screen. As you know, a 2D screen is the traditional and typical window for watching media content, but now is the age of 3D, and audiences' needs for visual experiences are beginning to go beyond a rectangular screen. 360-degree videos, virtual reality and augmented reality all seek to achieve a natural user experience in the field of vision. Just as voice user interfaces, interactions and experiences are called NUI (natural UI), the visual experience of VR/AR/MR also aims to be much more natural. Human eyes have no such limit. We can gaze wherever we want to see. A limited window like a rectangular screen with a narrow field of view is not so natural for human vision. We want to see and know what is happening outside the 2D frame when we watch media content on a television, laptop or smartphone.

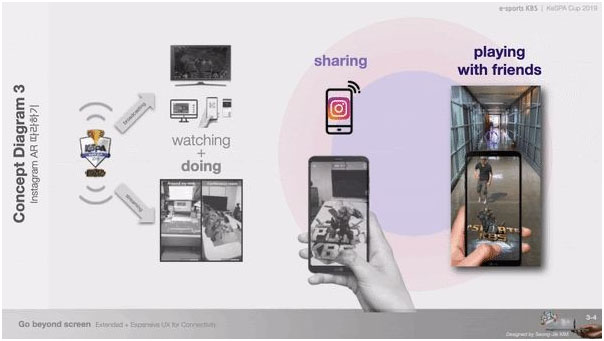

User scenario

To elaborate, let us imagine a scenario that shows how to convert 2D media content into a realistic 3D experience. An eSports fan wants to watch Faker, the world's number one professional gamer, play League of Legends on a YouTube stream, so he turns on his laptop and opens the YouTube channel in a Web browser. A semi-final match is being streamed on the channel, and he becomes absorbed in the dynamic game flow of Faker's play. Meanwhile, a game caster lets him know that he can regenerate an important battle scene of the League of Legends semi-final on his own smartphone by triggering it with the official logo of the eSports tournament. After hearing that, he turns on the camera of his smartphone to try what he understood from the caster's explanation. He finds an official logo image on the League of Legends streaming video on YouTube. As soon as he turns his camera to the logo image, a 3D animation begins from the logo image, as if it were the gate of a holo-portal. The animation is well calibrated to the desk his laptop sits on, so he can enjoy the highlight scene of the League of Legends match in a 3D augmented reality view.

My challenge

What do you think about above scenario? Because of some reason, I could not perfectly realize this scenario, but I successfully made a minimum viable package of AR experience for 2019

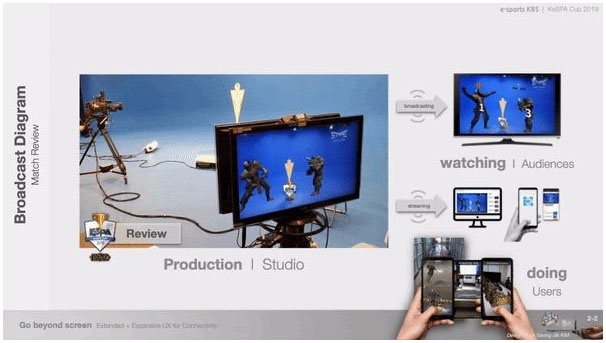

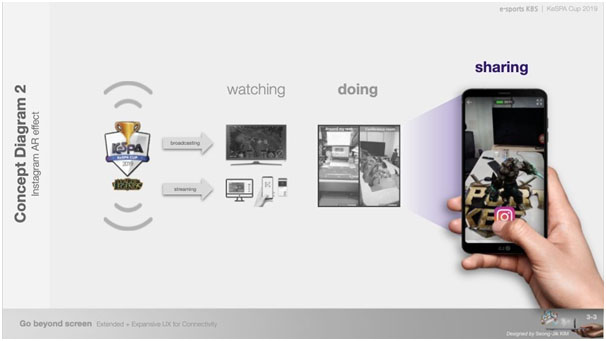

LoL KeSPA Cup including a real-time rendered AR broadcast scene powered by Unity3D plugin and RedSpy camera tracking system, an mobile AR app built by Google’s ARCore and an AR effect created with Facebook’s SparkAR studio for Instagram. A real-time rendered AR broadcast scene could be used for an inserted spot video in-between each set of a match of KeSPA Cup tournaments, a prototype mobile app built by ARcore was provided to beta testers, and Spark AR effects are distributed to general Instagram users so that they can

regenerate the same AR scene used for the spot video (real-time AR broadcast scene) by using their own Instagram camera. You can understand what I created with the following a broadcast diagram and UX concept diagram 2.

UX pyramid

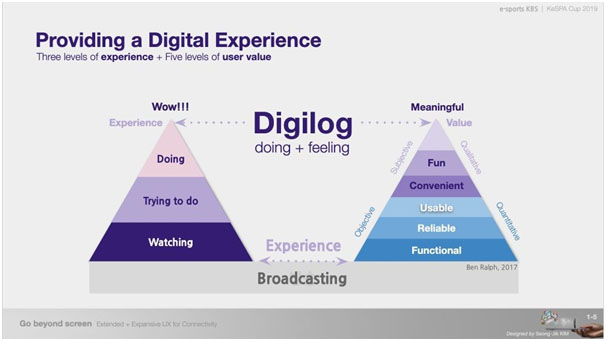

These are the three stages of user experience with AR visualization. Watching is the first stage, and it is the traditional media-consuming behavior. All media content is provided for watching. This is the fundamental user experience and the bottom of the UX pyramid illustrated above.

The second stage is trying to interact with an AR visualization in person. In this stage, users can bring some part of the live stream into their own physical space. Their smartphone becomes a second screen that transforms 2D video content into 3D animation. The last stage is to capture and share. Watching, experiencing and sharing are the three stages. Augmented reality visualization is used to summon a game scene from behind the screen to the forefront of the users' space. Sharing a captured video could be a viral trigger for users' friends to experience a similar 3D augmented reality scene in their own space. I expect that successive regenerations will happen through social media connections. Users can watch 3D character animation, so the AR scene looks alive beyond a flat 2D screen. It makes users feel that a game character is jumping out of the screen into their physical world. Furthermore, sharing the AR video will extend a local visualization to the global world if the AR visualization is impactful and shareable enough. The top level of the UX pyramid is to deliver something meaningful and so much fun that users are willing to recommend the experience to their acquaintances.

Supporting theory

Naturally, an embodied experience is much more memorable. In addition, user-created content attracts more engagement because each user's contribution creates more and deeper interest and enthusiasm.

Therefore, turning a traditional watching experience into something doable and interactive is a very effective strategy for helping users remember a symbolic and iconic brand. To do that, I used the official logo image of the KeSPA 2019 emblem and put the "e-sports KBS" logo into the 3D augmented reality visualization. While users try to trigger the AR scene and experience its fun aspects, they unconsciously recognize the brand name little by little, and they can then remember the name together with a simple character's gesture animation. This was my intention in designing the whole package of AR experiences. In the cognitive science study "Fluid Movement and Creativity" (Jan 21, 2012, by Michael L. Slepian and Nalini Ambady), the two researchers found that fluid movement influenced cognitive processing in the human brain.

I read this as support for the idea that embodied action can also strengthen memory, so I wanted to apply the result of the research to this AR project. The users have to concentrate on triggering the AR animation and moving their smartphone, so such physical movement can stimulate their cognitive processing.

It’s time to wrap up!

There are seven components that make an AR experience workable in real time.

- Lenses (camera or screen)

- Sensors (GPS, Accelerometer, and Gyroscope)

- Calibration algorithm

– Currently, ML and SLAM are being integrated for faster and more effective creation of a sort of digital twin

- Real-time rendering on devices (GPU and CPU)

– Foveated rendering with eye tracking is currently being pursued for more efficient performance

– Unity3D and Unreal are very widely used for this because of their rendering quality

– They work like an engine that makes a 3D digital scene much more realistic, including lighting, shading, etc.

- User interactions (Without interactions, it is no different from a traditional media experience)

– Users want to control whatever they see in new media.

– This need and desire hand the POV over to the users' will (Just watching is boring)

- Backend system (Data is a key)

– Computer vision also needs a huge database.

– Users' interactions produce a great amount of data.

- Connections (No Internet, no service)

– 5G mobile network will be a better way to gather and distribute such data.

– Cloud systems and MEC (Mobile Edge Computing) will be adopted for newly developed mobile devices.

Which kinds of desire have made digital media services popular?

- The age of prosumers, who desire to produce while consuming media

– Almost 80 percent of online content is user-generated images and videos.

- Short-form video has become more dominant among Millennials and Generation Z

– Snapchat, Kwai, TikTok, and Instagram Stories

– These are also a kind of video-driven UGC platform

- Users show more interest and enthusiasm for content they are directly involved in

– Parodies, imitations, cover dances, and so on

– Share, reply, like, and so on

- Doing is more memorable than just watching (Embodiment of memory)

What have I done for an eSports event, the 2019 LoL KeSPA Cup tournament?

- To direct a project that attempts to adopt the Unity3D-RedSpy plugin for live AR broadcasting

– RedSpy optical camera tracking sensors send tracking data to the Unity3D render engine

– The movement of a virtual camera in the Unity scene is calibrated to the tracking data sent from the real camera's movement

– A simple 3D character's winning gesture animation is placed on the main stage of the KeSPA Cup tournament in real time by augmented reality technology

- To build a mobile AR app by using Google ARCore

– Used the same Unity animation scene that I designed for the live AR broadcasting above

– Real-time shadow generation reflected the physical lighting conditions

– A prototype Android package file was provided to beta testers

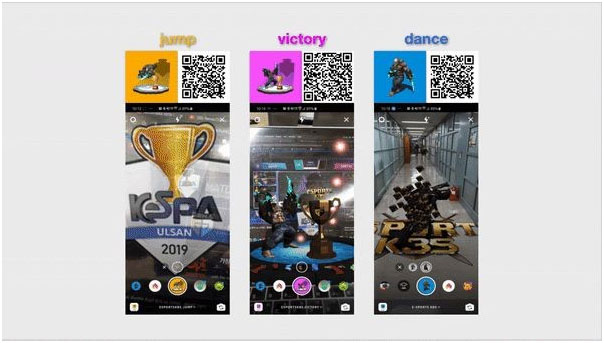

- To create three Spark AR effects with Spark AR Studio for promotional purposes

– eSportsKBS.jump, eSportsKBS.victory and eSportsKBS.dance

– Officially approved and released to Instagram platform

– Contained visual elements of the official KeSPA emblem and e-sports KBS logo image

– Guided users to experience, capture and share their creations through an online event on esports.kbs.co.kr

– You can try these effects by scanning the QR codes below with your smartphone

- To adopt a gamification strategy to motivate more user participation

– by asking users to share their own Instagram posts with hashtags: #eSportsKBS, #KeSPA2019, #kbsARmaster, etc.

– by preparing many different levels of participation, with prizes for such contributions: AR master, Creator, Dr.stranger, sharing master, dance follower, and so on

– QR code for accessing the event page is on the right ▶▶▶

In conclusion, this challenge was a pretty meaningful and valuable project for me. Augmented reality is attempting to become a part of the live streaming of an eSports tournament in South Korea. The video of a semi-final match of this tournament is here: https://youtu.be/Ka1GGBwPqro

Moreover, a real-time rendering engine, Unity3D, is being used for broadcasting, and the camera tracking is integrated with a virtual camera in the Unity 3D scene. In the rendered output (the lower video), a character appears to make a jumping gesture with a low-poly KeSPA Cup on the stage, whereas the real stage looks like the upper video.
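The idea of driving a virtual camera from a tracked real camera can be sketched in a few lines of Python. This is only an illustration under my own assumptions, not the RedSpy data protocol or the Unity plugin's API: the `TrackingSample` fields, the y-up and z-forward axis convention, and the 36 mm sensor width are all hypothetical. Each tracking sample is turned into a camera position, a forward direction, and a horizontal field of view computed from the lens focal length.

```python
import math
from dataclasses import dataclass


@dataclass
class TrackingSample:
    """Hypothetical layout of one tracking sample from the real camera."""
    x: float                # position in meters
    y: float
    z: float
    pan_deg: float          # rotation around the vertical axis
    tilt_deg: float         # rotation up (+) or down (-)
    focal_length_mm: float  # current lens focal length


@dataclass
class VirtualCamera:
    position: tuple
    forward: tuple
    horizontal_fov_deg: float


def apply_tracking(sample, sensor_width_mm=36.0, studio_origin=(0.0, 0.0, 0.0)):
    """Map a tracking sample onto a virtual camera pose (y-up, z-forward assumed)."""
    pan = math.radians(sample.pan_deg)
    tilt = math.radians(sample.tilt_deg)
    # Unit forward vector from pan and tilt angles.
    forward = (
        math.cos(tilt) * math.sin(pan),
        math.sin(tilt),
        math.cos(tilt) * math.cos(pan),
    )
    # Pinhole-camera relation between focal length and field of view.
    fov = math.degrees(2 * math.atan(sensor_width_mm / (2 * sample.focal_length_mm)))
    ox, oy, oz = studio_origin
    position = (sample.x - ox, sample.y - oy, sample.z - oz)
    return VirtualCamera(position, forward, fov)


if __name__ == "__main__":
    sample = TrackingSample(1.0, 1.5, -3.0, pan_deg=0.0, tilt_deg=0.0,
                            focal_length_mm=18.0)
    print(apply_tracking(sample))
```

When the virtual camera follows the real one like this on every frame, the rendered 3D character stays locked to the same spot on the physical stage while the real camera moves.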

If I had not gotten support from Unity Technologies Korea and Yebon Korea, I could not have achieved this. Awesome and skillful engineers participated enthusiastically in this project. It still needs improvement, but we are very happy to have achieved these results and to share them with you. You can watch more video footage on my Facebook post:

https://m.facebook.com/story.php?story_fbid=2931175193613198&id=100001622371908

Additionally, there are other use cases of mobile AR. More and longer demo videos of the eSportsKBS Spark AR effects are here: https://m.facebook.com/story.php?story_fbid=2930849110312473&id=100001622371908

Every eSports event has its own #brand #emblem or #logo. It is used everywhere in the venue. Furthermore, it is also shown on the screens of Twitch, Facebook, YouTube and even television during an on-air broadcast or an online stream. If eSports fans and audiences use an AR camera on their own smartphone, they can create their own AR experience by triggering a pre-designed target image that is connected to a signature 3D animation. To trigger the jumping character animation, I used my STAFF badge, the banner of a ticket booth, and the KeSPA logos on the video wall of the main stage and in the PRESS room. If you can find the KeSPA Cup 2019 logo by googling or searching on YouTube, you can try this effect with your own smartphone. Thanks for reading.

About the blog writer:

Seong-Jik KIM

Principal UX/UI Designer, VR/AR Designer

KBS (Korean Broadcasting System)